OVERVIEW

Our laboratory focuses on future technologies in the field of accessibility and inclusivity. In this project, we wanted to learn how people with visual impairments (blind or low vision) take selfies and what challenges they face. Unfortunately, current assistive technology does not provide sufficient guidance for taking selfies, so most people with visual impairments do not take selfies independently. To address this user problem, we conducted user interviews with 10 people who have visual impairments and later brainstormed novel assistive technology features to solve it.

TIMELINE

2 months

My Role

Finding useful academic literature relevant to our case study

Writing and facilitating user interviews with visually impaired people

Contributing to design brainstorms

Writing the final report

MY TEAMMATES

Ricardo Gonzalez (Information Science Ph.D. Student)

Cheng Zhang (Information Science Professor)

Understanding the User

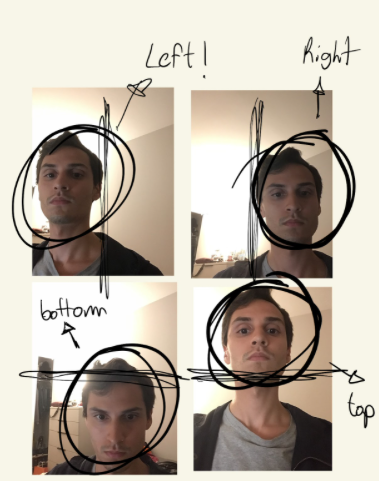

Four demonstrations of participants taking a selfie with their smartphone’s front-facing camera (P3, P6, P5, and P9). Participants are holding their phones with the front camera pointed in the general direction of their faces.

We Asked Questions Like...

Can you tell me about the most recent time you used your front camera on your phone?

Which apps do you take selfies with?

METHODS AND TOOLS USED

Remote User Interviews

Research Synthesis

To solve the problem, we must first understand it from the user’s perspective. I scripted a user interview, which required inclusive wording for our user group. For instance, we couldn’t say “look at …” or “can you see…” because our participants have visual impairments. Our team was initially using an outdated database of potential participants. When that didn’t yield enough participants, I decided to get creative and recruit directly from the Reddit community r/Blind. This proved successful, and we conducted the 10 interviews needed for our sample.

If your face wasn’t in the viewpoint of the camera, what part of the body would you prefer to be prompted to move?

Initial Findings

Most participants made it clear that they were unable to take selfies on their own and relied on assistance from others. One participant used her business as an example of how taking good selfies independently could affect her life: “you really get a feel for who they are and you get a feel for who they are as a person, in addition to who they are as a business owner. And I feel like I'm kind of missing out on that because I can't express myself in that way right now.” Participants also mentioned that the quality and appearance of their photos are particularly important to them when taking selfies and posting on social media: “[Taking a photo for the dating app] was stressful because I kept thinking it's probably gonna be a really weird shot where I'm either cut off or I'm not quite in center or again, the whole eye thing where it's gonna look like I have a physical disability. And you're trying to put your best face forward.”

How might we help visually impaired people use their front camera more effectively?

SeeingAI

Functional Requirements

What Would Be Next

Inspiration

During our interview process, more than half of our participants mentioned using current assistive technologies and described their shortcomings. AI has made object orientation and recognition feasible, but when our users tried to take selfies or record videos, both applications fell short. So, I recommended that we come up with functional requirements for our future application that would alleviate the pain points users currently have.

After discussing what was missing in other assistive technologies, I proposed that we define functional requirements for our own. As a team, we decided on three priority requirements for our design. The first is relative head positioning: if the person is positioned toward the left, right, top, or bottom of the frame, they receive auditory feedback confirming so. The second is that the application can locate the person’s relative head position when they turn their head to either side. The last is that, if the user wants their head tilted, the application gives auditory feedback when the head reaches the preferred tilt.
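To make the three requirements concrete, here is a minimal sketch of how they could map to spoken prompts. It is an illustration only, not something the team built: the `FaceObservation` type, the function names, the tolerance values, and the prompt wording are all hypothetical, and it assumes some face detector already supplies a normalized face center plus head turn (yaw) and tilt (roll) angles. The sign convention (positive yaw means turned right) is also an assumption.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class FaceObservation:
    """Hypothetical output of a face detector for one camera frame."""
    center_x: float  # 0.0 = left edge of frame, 1.0 = right edge
    center_y: float  # 0.0 = top edge of frame, 1.0 = bottom edge
    yaw_deg: float   # head turned to a side; 0 = facing the camera (assumed: + is right)
    roll_deg: float  # head tilted toward a shoulder; 0 = upright


def position_prompt(face: FaceObservation, tolerance: float = 0.1) -> str:
    """Requirement 1: report where the face sits relative to the frame center."""
    dx = face.center_x - 0.5
    dy = face.center_y - 0.5
    parts = []
    if dy < -tolerance:
        parts.append("top")
    elif dy > tolerance:
        parts.append("bottom")
    if dx < -tolerance:
        parts.append("left")
    elif dx > tolerance:
        parts.append("right")
    if not parts:
        return "Your face is centered."
    return f"Your face is toward the {' '.join(parts)} of the frame."


def turn_prompt(face: FaceObservation, tolerance_deg: float = 10.0) -> Optional[str]:
    """Requirement 2: report when the head is turned to either side."""
    if face.yaw_deg > tolerance_deg:
        return "Your head is turned to the right."
    if face.yaw_deg < -tolerance_deg:
        return "Your head is turned to the left."
    return None


def tilt_prompt(face: FaceObservation, preferred_roll_deg: float,
                tolerance_deg: float = 5.0) -> Optional[str]:
    """Requirement 3: confirm when the head reaches the user's preferred tilt."""
    if abs(face.roll_deg - preferred_roll_deg) <= tolerance_deg:
        return "Your head is at your preferred tilt."
    return None


def guidance(face: FaceObservation, preferred_roll_deg: float = 0.0) -> List[str]:
    """Collect the prompts that a text-to-speech engine would read aloud."""
    prompts = [position_prompt(face)]
    for prompt in (turn_prompt(face), tilt_prompt(face, preferred_roll_deg)):
        if prompt is not None:
            prompts.append(prompt)
    return prompts
```

In a real application these strings would be passed to a screen reader or text-to-speech engine, and the tolerances would need tuning with users during testing.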

Because the school year ended before this project was finished, further work remains. Next, we would have continued to prototype potential solutions based on the feedback we received and the functional requirements we created for our application. There would also be user testing and wireframing for different iterations. We wrote an academic paper on this study that was later published at the ACM SIGACCESS Conference on Computers and Accessibility.

Google Lookout